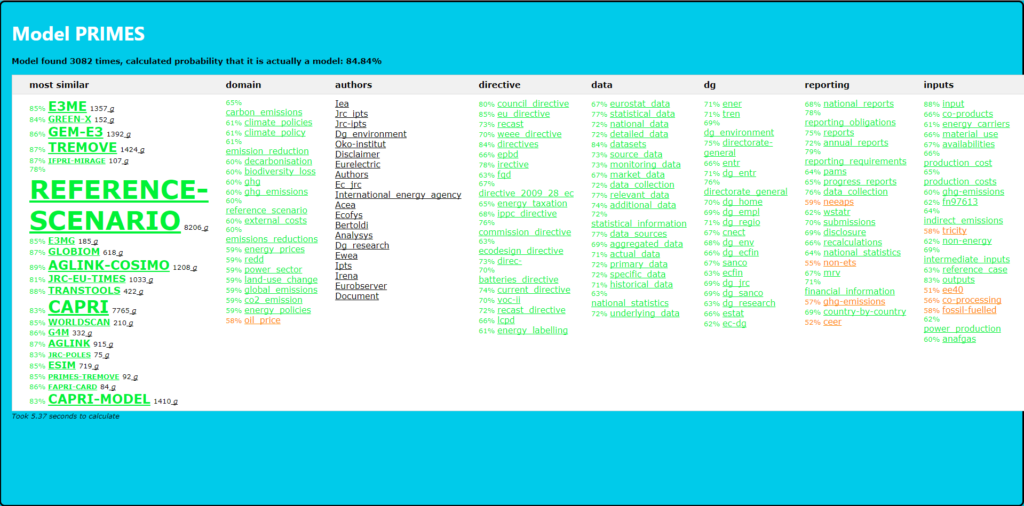

Imagine you spent eight days training your word embedding on 24 CPU server. Now you have a model and keep dreaming what to do with it. My colleagues, who are cataloguing computational models used in impact assessments and preparatory studies, spend days and weeks discovering these models by reading the relevant documents. This neural network quickly allows not only word similarity searches, so once we know a name of just one model, we can have all of them, but also distance queries showing what is relevant. Who are the authors? In what domain is the model most used? What data it uses and in what reporting? Which DGs use the model? So many questions and, shockingly, so many answers in less than six seconds per query on my laptop: